FOIA Files: The University of Washington

The University of Washington, through its Center for an Informed Public, has played an outsize role in shaping "anti-disinformation" campaigns.

How do “anti-disinformation” researchers know what “disinformation” to resist? How do they decide what is and is not true?

This past May, Racket received some new FOIA results from the University of Washington and its Center for an Informed Public. Since its inception in December 2019, the CIP has sought to “translate research about misinformation and disinformation into policy.” Spearheaded by Professor Kate Starbird, the CIP analyzed the spread of online “misinformation” during the COVID-19 pandemic, partnering with medical professionals in an ostensible effort to dispel vaccine hesitancy among expectant families. Later, it put together a report on the most influential Twitter accounts shaping discourse around the Israeli-Palestinian conflict. More recently, it’s been working on an information literacy curriculum meant to help librarians “address and navigate problematic information in their local communities.”

Racket has filed multiple FOIA requests with the Center. Recently we received several productions. The first reached us in June, when we received over 1,000 pages of emails and documents. These further illustrate the CIP’s extensive influence, multitude of projects, and role in helping form initiatives like the Alliance for Securing Democracy (ASD) and the Election Integrity Partnership (EIP). We’ve uploaded all of the documents to the Racket FOIA Library, where you can access them for free.

One of the key issues in the emails pertains to the question posed above. What system do “anti-disinformation” researchers use to settle on the “false and misleading” narratives for study? If you guessed “not much of a system at all,” it appears you’d be right.

On February 10, 2021, lawyer Eric Stahl of Davis Wright Tremaine reached out to Julia Carter Scanlan, the director of strategy and operations at the CIP, with questions about a draft of the final report of the Election Integrity Partnership, a consortium that included the CIP. Stahl had been tasked with conducting a legal review before publication. In his correspondence with Carter Scanlan, he asked an obvious question: “How do the authors of the EIP determine that a statement is false/disinformation or worthy of a ‘ticket’?”

Stahl’s concern was simple: What if someone claimed they’d been falsely accused by the EIP of “tweeting disinformation”? He then walked the “anti-disinformation” researchers through the negligence standard familiar to all journalists: If a reporter “checks their facts, pursues reasonable leads, and doesn’t ignore relevant sources,” he or she is off the hook for defamation.

You can get things wrong, but you have to at least attempt to check facts. In that vein, he said, “it would be helpful to know how you decide something is false,” and if there is an “articulable standard.”

The more pertinent revelations are summarized below, but among a number of eyebrow-raising issues, the headline disclosure involves Stahl’s query: How do anti-disinformation authorities decide what’s disinformation? The response raised numerous questions.

UW’s Starbird, one of the most well-known players in the anti-disinformation space, first responded to Stahl by pointing elsewhere. The process for “determining veracity,” she wrote, took place “outside of our analysis team at UW… it was handled by the Stanford team”:

All of this is confusing because, as Stahl noted, the EIP, in announcing itself, said outright it was “not a fact-checking partnership.” Still, someone must have chosen material to be ticketed and studied as misinformation. But who? And how?

Racket readers will recall the four major partners in the EIP were Stanford, the University of Washington, the defense-funded firm Graphika, and the Atlantic Council’s Digital Forensic Research Lab (DFRLab). The Partnership focused on identifying, countering, and documenting actors who promote misinformation and disinformation. Founded in July 2020, the EIP “tracked voting-related dis- and misinformation and contributed to numerous rapid-response research blog posts and policy analyses before, during and following” that year’s presidential election. The consortium shuttered after the 2022 midterms.

Starbird told Stahl that the “veracity” issue was handled at Stanford; another email in our FOIA results shows her writing to Renée DiResta and Alex Stamos of the Stanford Internet Observatory to share Stahl’s feedback. (Stamos previously served as chief security officer at Facebook.) She explained that the lawyer was asking for “more content about how we vetted and determined if a piece of content or claim was misinformation or disinformation”:

Racket reached out to Starbird for clarification. Asked how the EIP determined what was and was not disinformation, she replied (emphasis ours):

My understanding is that the EIP did not do independent fact-checking of specific claims, but that students were instructed to, where possible, find and log links to external fact-checking and local media articles that would provide context for other researchers to understand a claim.

This suggested that when the EIP did any kind of fact-checking, it was by students after material had already been identified. We’re still waiting for further responses from Starbird and others, but it’s obviously a source of confusion if the methodology for choosing material to be ticketed or even studied as misinformation didn’t involve an investigatory process. Later in this story, the issue reappears in other ways.

On a related note, the first chapter of the draft provided to Stahl includes a graphic titled “Four Major Stakeholder Groups.” That illustration provides an overview of the EIP’s most important collaborators.

A different version of that graphic appears in the copy of the report that was released to the public. But in that version, the EIP omitted any mention of its partners in media: The Washington Post, The New York Times, and First Draft News. At the same time, the official version of the report made no secret of the EIP’s collaboration with government actors like the Cybersecurity and Infrastructure Security Agency (CISA) and the State Department’s Global Engagement Center (GEC).

When reached for comment, New York Times managing director Charlie Stadtlander wrote:

The New York Times has no relationship with the group other than as a media organization that has covered its work.

Speaking on background, a spokesperson for The Washington Post offered the following response:

…I want to flag The Washington Post’s exclusive reporting in September 2022 examining accounts that rose to prominence spreading disinformation about the 2020 election that then continued to drive other polarizing debates. You’ll note The Washington Post’s methodology at the bottom, which details how the analysis was conducted.

The methodology highlights how The Washington Post identified the biggest spreaders of disinformation about the 2020 election, culled from databases including those from the Election Integrity Partnership in addition to the Technology and Social Change Project at Harvard’s Kennedy School and the Social Technologies Lan [sic] at the Jacobs Technion-Cornell Institute.

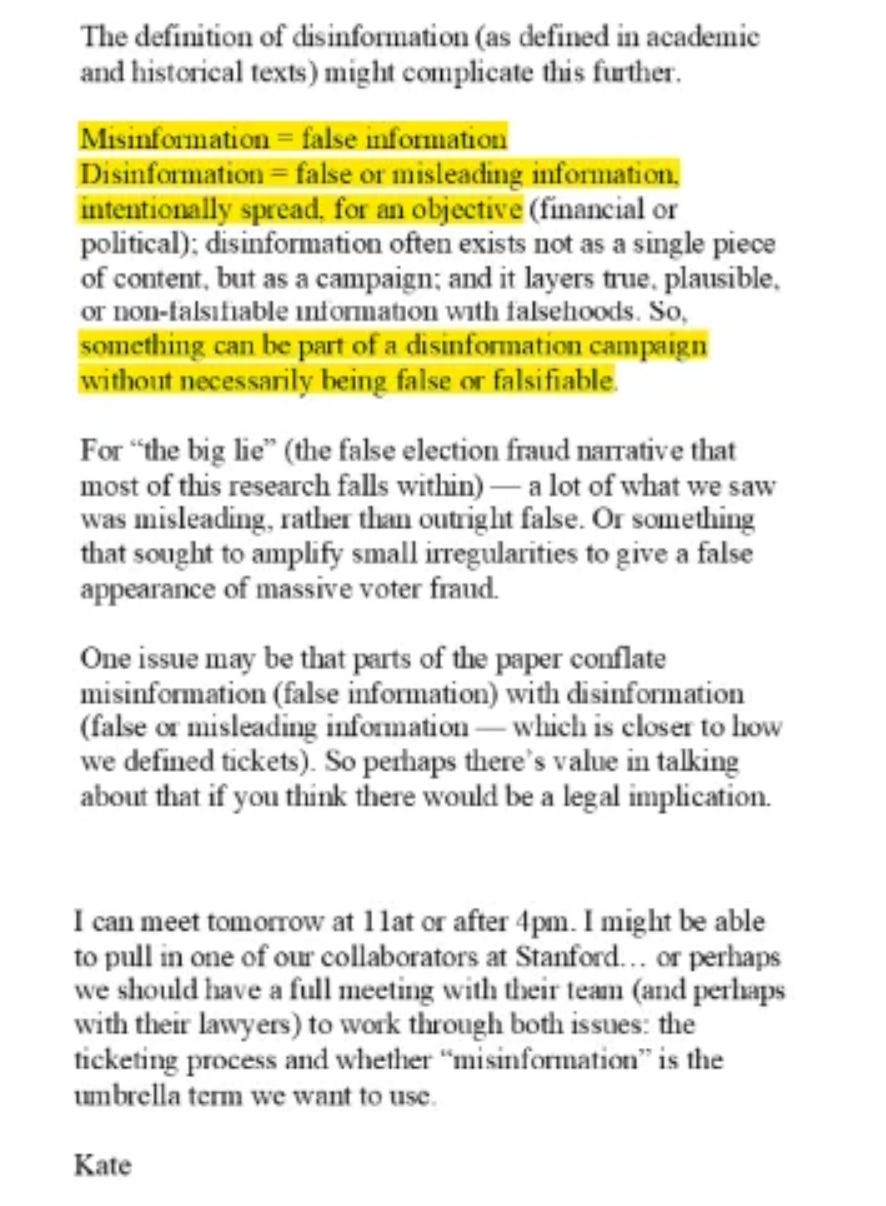

Before moving on, it’s worth noting that in her letter to Stahl, Starbird also offered industry definitions of misinformation and disinformation. The short version is that misinformation is false, while disinformation is false or misleading (a concept that may or may not involve false information).

Starbird used to sit on the Department of Homeland Security’s Misinformation, Disinformation, and Malinformation Subcommittee. The DHS previously defined malinformation as “based on fact, but used out of context to mislead, harm, or manipulate.” Here, Starbird defined disinformation as “false or misleading information, intentionally spread, for an objective… exists not as a single piece of content, but as a campaign… layers true, plausible, or non-falsifiable information with falsehoods… something can be part of a disinformation campaign without necessarily being false or falsifiable.”

When asked if this meant that of the three categories, only misinformation definitely included falsehood, Starbird said no:

Both misinformation and disinformation are necessarily false or misleading, but they are often best operationalized with a “unit of analysis” that is at the rumor or campaign level, not as an individual statement or social media post.

This answer, too, complicates matters. In journalism, facts aren’t demerited because someone might use them to make an unwelcome argument. Things are merely true or not. Starbird’s worldview seeks to replace the relatively uncomplicated journalistic standard of true or untrue with one that potentially forces a fact to pass multiple tests before being judged fit to reach audiences. Is a thing true but potentially misused? What expertise allows a person to answer that question?

On June 5, 2017, Laura Rosenberger reached out to Starbird with an intriguing offer. Rosenberger’s résumé is too illustrious to fully capture here, but she previously served as an advisor to such figures as Antony Blinken and William Burns, and was the chief foreign policy expert for the Hillary for America campaign in 2016. In 2017, she and her colleagues at the German Marshall Fund were in the process of launching the Alliance for Securing Democracy (ASD), an initiative that would “convene experts across different sectors” with a focus on “developing comprehensive strategies to defend democracy against Russian assault.” This was one of the first crossover agencies merging Clinton Democrats with Bushian neoconservatives, and it would become the hub responsible for the infamous Hamilton 68 site exposed in the Twitter Files.

As Racket readers know, Hamilton 68 would be referenced as a reliable source by nearly every mainstream news organization in the country over the following years. MSNBC alone cited stories sourced to the now-defunct dashboard on 279 separate occasions:

Starbird’s correspondence with Rosenberger lays bare a fallacy at the heart of the entire “anti-disinformation” industry. The ASD’s purported mission was to challenge the legitimacy of the 2016 presidential election by tying the outcome of that race to Russian disinformation. The Alliance was launched in July 2017, right as the Russian collusion narrative was gaining momentum. Rosenberger, the organization’s inaugural director, was a Hillary for America alum who currently chairs the American Institute in Taiwan. (Prior to that, she served on the National Security Council under Presidents Obama and Biden.) “Anti-disinformation” research is, in practice, always a partisan endeavor.

The issue of who is and is not qualified to identify disinformation comes into even greater focus when the informational offense hasn’t even occurred yet. Some of the emails in this FOIA batch outline the EIP’s involvement in a campaign proposed by Beth Goldberg of Jigsaw (formerly Google Ideas). On March 1, 2023, Goldberg reached out to Starbird, DiResta, and several other academics to discuss an effort to “prebunk” election-related conspiracy theories. Goldberg proposed a strategy in which she and her colleagues would catalog the most prevalent “misinformation tropes” and have a creative agency produce videos “prebunking” those tropes.

In a subsequent email, Goldberg shared a list of tropes they could test. Among the candidates were “tricky ballots,” “mysterious late ballots,” “ballot mules,” and “vote drops/spikes.” She suggested that they have at least two videos prepared for a combination of battleground primaries and less competitive primaries and advised against creating videos for specific states, lest they be accused of partisanship. Those videos would then air as YouTube ads, with “university partners” using the platform’s survey function to test how viewers responded to the information. Goldberg even suggested choosing a primary race as a pilot test for the project and using that race to predict the “misinformation tropes” that would be most likely to gain statewide traction. As far as “contracting and payment arrangements” were concerned, she floated the possibility of having Google pay for the production of the videos.

On March 27, Starbird told Goldberg that she and research scientist Mike Caulfield would “co-lead” the project on behalf of the University of Washington. She stated that they didn’t expect to be compensated for their efforts, adding that they would “fund [their] time” using existing “philanthropic sources.” Among those sources were the Knight Foundation, the Hewlett Foundation, and Craig Newmark Philanthropies. (The CIP is also funded by the National Science Foundation, the Omidyar Network, and…Microsoft.) Starbird also emphasized that they didn’t want the proposed YouTube ads to be underwritten by any entity affiliated with her university or the CIP.

We asked Goldberg if she could shed some additional light on Jigsaw’s proposed “prebunking” efforts. A spokesperson for Jigsaw responded to our request for comment with the following:

This technique would have helped people spot common manipulative practices, and was similar to projects requested in Europe. The goal was to identify nonpartisan techniques that were clearly manipulative. In 2023, we explored running a test in the US, but as we confirmed many months ago, never did, and have no plans to do so in the US.

Jigsaw may not have produced any election-oriented “prebunking” videos, but they did create a handful of public service announcements meant to discourage vaccine hesitancy. Here’s one example.

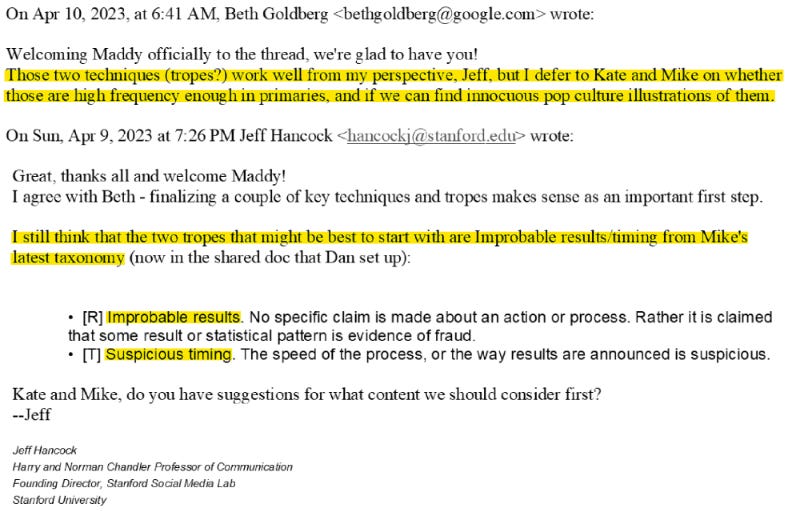

On April 10, Jeff Hancock, the founder and director of the Stanford Social Media Lab and the SIO’s faculty director, proposed that they test two election-related tropes: “improbable results” and “suspicious timing.” Goldberg responded by questioning whether those tropes were “high frequency enough.” She also seemed aware that they’d be unlikely to find “innocuous pop culture illustrations” of such phenomena.

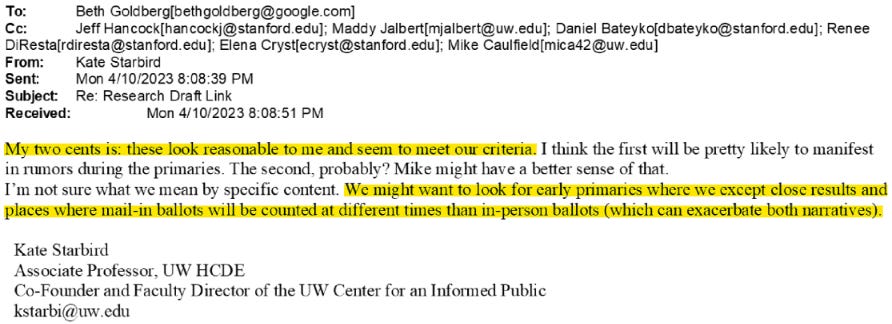

Later that same day, Starbird voiced her approval for using the two tropes Hancock selected and made the following recommendations:

We might want to look for early primaries where we except [sic] close results and places where mail-in ballots will be counted at different times than in-person ballots (which can exacerbate both narratives).

Starbird’s comments almost seemed to anticipate HAIWIRE, a role-playing game designed by the CIA for the purpose of preparing intelligence officers for various information crisis scenarios. (Matt Taibbi and Walter Kirn discussed the game on a recent episode of America This Week.) The first scenario Starbird described is essentially the inverse of “THE PURPLE DISAPPEARED.”

Similarly, Starbird’s suggestion that they use a competitive primary hints at the group’s goal of “prebunking” any conspiracy theories that might emerge when a race expected to go one way yields a different outcome. In the same vein, her second recommendation seems geared toward explaining how later tabulation of mail-in ballots might produce suspicious-looking spikes for one candidate.

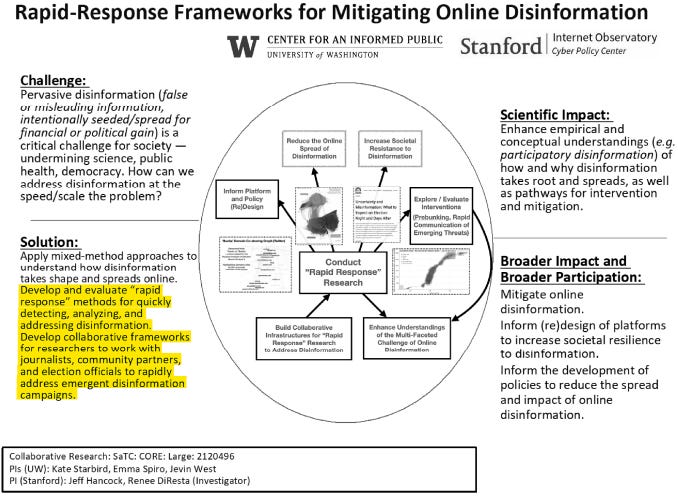

This practice of “prebunking” election-related conspiracy theories before they can go viral is consistent with a graphic put out by the CIP and the SIO, titled “Rapid-Response Frameworks for Mitigating Online Disinformation.” Notice how their “solution” entails the use of “‘rapid response’ methods for quickly…addressing disinformation.” The graphic also alludes to the EIP with its reference to “collaborative frameworks for researchers to work with journalists, community partners, and election officials.”

On September 27, 2022, Microsoft director of information integrity Matthew Masterson made contact with Starbird. Masterson is affiliated with Democracy Forward, a Microsoft “corporate social responsibility” initiative aimed at “safeguarding open and secure democratic processes, promoting a healthy information ecosystem, and advocating for corporate civic responsibility.” Prior to that, he worked as an advisor to CISA and held a fellowship at the SIO.

Masterson asked Starbird if she’d be willing to brief his team on her work with the EIP, citing her ability to “deepen the community’s understanding of the current trends and challenges in the [information integrity] space.” He communicated his colleagues’ desire to hold the briefing prior to that year’s midterms and even invited her to bring along her counterparts at the SIO.

Masterson’s email namechecks Clint Watts, a former FBI special agent and consultant who heads Microsoft’s Threat Analysis Center. Watts is also a fellow at the ASD and a national security contributor to NBC News and MSNBC. His career is a testament to the symbiotic nature of the relationship between tech giants, federal law enforcement agencies, the news media, and “anti-disinformation” research organizations.

These excerpts offer only a glimpse at the University of Washington’s influence in the “anti-disinformation” ecosystem. The Center for an Informed Public’s dalliances with the Alliance for Securing Democracy and the Election Integrity Partnership highlight some of the university’s most high-profile collaborations. Perhaps more than anything, the CIP’s reach serves as a test case for the role “anti-disinformation” research groups play in spawning and facilitating more collaborative organizations. And even as some close their doors, others are sure to pop up and take their place.

Here are a few more highlights from this latest FOIA production. Among the disclosures are the CIP’s communications with a member of the House Select Committee on the January 6th Attack, as well as emails describing the EIP’s efforts to monitor election drop boxes and the CIP’s plans to collect data from Telegram.

On January 13, 2022, Professor William Scherer of the University of Virginia contacted Kate Starbird, Renée DiResta, and Alex Stamos. At the time, Scherer was serving as chief data scientist for the House Select Committee to Investigate the January 6th Attack on the United States Capitol. Scherer offered to set up a meeting in which the trio could explain their work with the EIP to “other fellow senior members of the committee.” (See page 395.) On January 21, Starbird provided Scherer with a paper outlining their methodology for “identifying repeat spreaders of election misinformation.” However, she noted her reluctance to share a list of 150 Twitter influencers they were monitoring. She claimed that the list included accounts that were correcting misinformation. (See page 394.)

On November 2, 2022, Joel Day, then the research director at Princeton University’s Bridging Divides Initiative, reached out to the EIP. (Day is currently managing director at the University of Notre Dame’s Democracy Initiative.) Day said he was “working with partners to track all planned and actualized incidents at drop boxes throughout the country.” He cast doubt on the veracity of election fraud claims made by conservative activist group True the Vote and asked the EIP if they could share any drop box trends they had noticed. (See page 330.)

On February 9, 2023, University of Washington engineer Lia Bozarth reached out to Daniel Bateyko of the SIO to ask him about the Observatory’s Telegram data collection pipeline. Bozarth described how the CIP was “in the process of building up infrastructure to support collecting data from Telegram” and hoped to “provision more phones to expand [their] data collection.” She also asked Bateyko a few questions about overcoming rate limits, sampling channels, and downloading media from the platform. (See page 212.)

Feel free to peruse the latest disclosure from the University of Washington’s Center for an Informed Public in the Racket FOIA Library.
